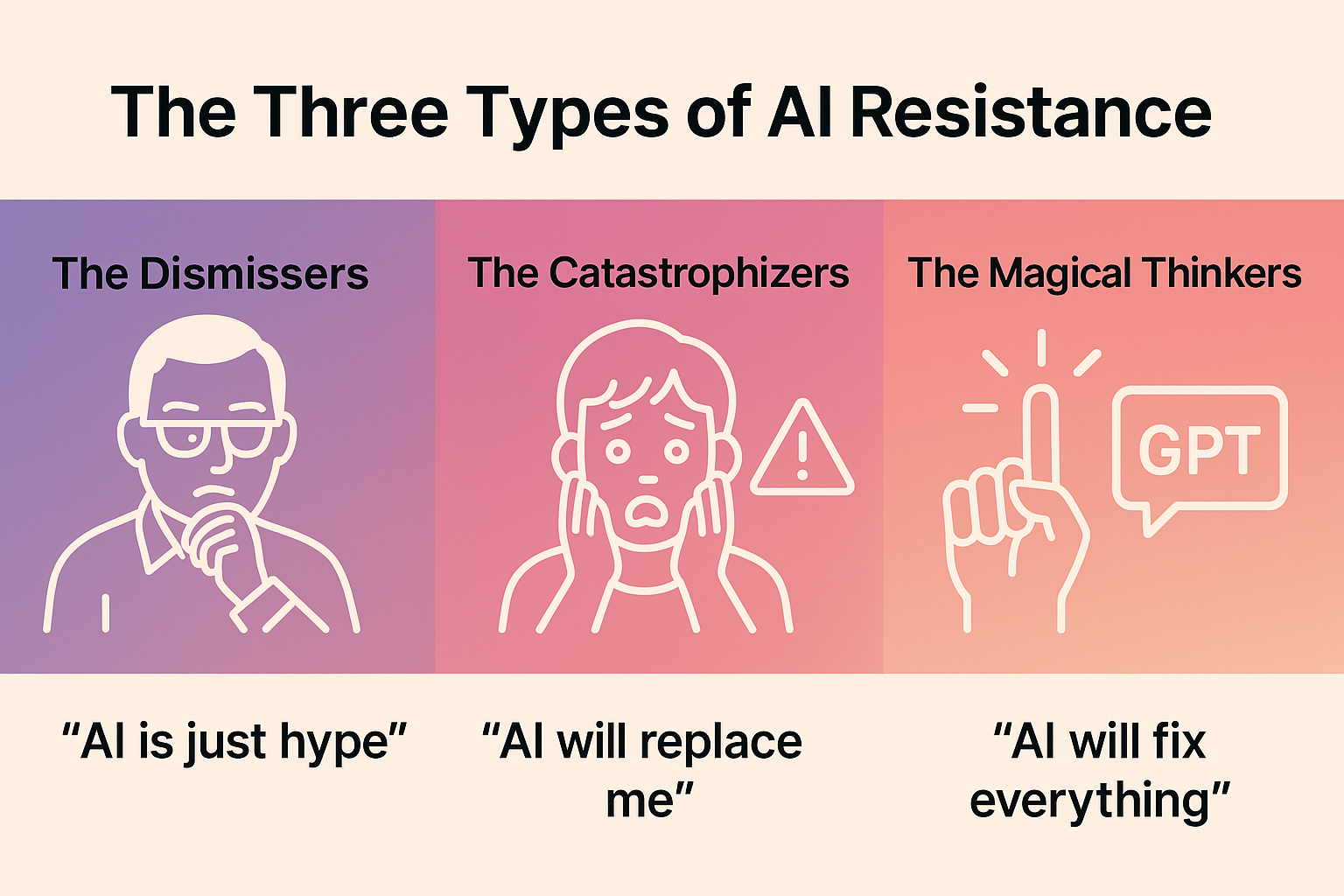

“Our developers think AI is just fancy autocomplete. Our executives think it’ll solve everything overnight. And everyone in between is convinced they’ll be replaced by a robot next week.”

This was what a fellow CTO shared with me last month. Three months into their AI transformation, they had all the technology figured out, but their people were falling apart.

After consulting with dozens of Swiss companies and working in AI for many years (long before LLMs were a thing, of course), I’ve seen this pattern repeatedly. The biggest barriers to AI success aren’t technical; they’re organizational.

Companies struggling with AI aren’t those with inadequate infrastructure or poor model performance. They’re the ones that haven’t figured out how to navigate three distinct patterns of human resistance.

Here’s what I wish everyone responsible for AI in their company knew about the psychology of AI adoption.

Type 1: The Dismissers – “AI is just hype”

These are your most experienced people and your process gatekeepers: the senior experts who’ve seen every tech trend come and go, plus the compliance and QA teams who protect their authority by creating barriers to AI implementation.

What they sound like:

- It’s just pattern matching

- AI makes too many mistakes

- We need six months of compliance review first

- Our current process works fine

- What about data governance and audit trails?

- We’ve been doing this for 20 years without AI

Why this is dangerous

Dismissers create competitive blind spots and implementation paralysis. While they’re pointing out AI limitations or demanding endless compliance reviews, your competitors are achieving 20-30% productivity gains. I’ve seen companies miss entire market cycles because their senior experts convinced leadership that “AI isn’t ready yet” or their compliance teams demanded months-long approval processes.

The regulatory dismissers are particularly dangerous: they use legitimate governance concerns as weapons against progress. “We need complete audit trails” becomes a reason to never start. “Data privacy concerns” becomes an excuse to avoid any AI experimentation.

The numbers don’t lie: Companies with high AI adoption are seeing 3X higher ROI than those still “evaluating.” But Dismissers keep organizations stuck in evaluation mode.

The real psychology

Dismissers aren’t being stubborn; they’re protecting their professional identity and institutional power. When AI performs tasks they’ve mastered over decades, it feels like their expertise is being devalued.

The compliance dismissers add another layer: they’ve built careers on being the “voice of reason” who prevents disasters. AI represents uncontrolled risk to them, so they create elaborate barriers disguised as responsible governance.

What actually works

The reality? Sometimes you just need to move forward and prove the value.

• Executive mandate when needed

Don’t wait for every skeptic to come around. Set clear expectations that AI adoption is a business priority.

• Start small and prove results

Pick low-risk applications where success is measurable. Let the results speak louder than arguments.

• Leverage their expertise selectively

When Dismissers do engage, channel their critical thinking into useful feedback about implementation risks.

• Frame AI as tool enhancement

Position AI as making their existing expertise more valuable, not replacing it.

Type 2: The Catastrophizers – “AI will replace me”

These people read every AI headline as a threat. They’re paralyzed by fear, and their anxiety spreads through teams like wildfire.

What they sound like:

- Why should I learn this if it’ll replace me?

- AI is taking over everything

- My job won’t exist in five years

- They’re just preparing us for layoffs

Why this is toxic

Catastrophizers create talent retention crises. I’ve seen entire engineering teams quit because rumors spread that “management is replacing us with AI.” Their fear becomes a self-fulfilling prophecy as productivity drops and stress increases.

Fear-based resistance is contagious. One panicked person can derail an entire team’s AI adoption.

The real psychology

Catastrophizers aren’t overreacting; they’re responding to uncertainty with worst-case thinking. Every AI announcement triggers their threat detection system. They imagine replacement scenarios that are statistically unlikely but emotionally vivid.

What actually works

Provide specific reassurance with real data.

• Share realistic statistics

Only 2% of 2024 layoffs were actually attributed to AI. Most jobs are being transformed, not eliminated.

• Create learning paths

Show clear career progression routes in AI-augmented roles. Make the future tangible and positive.

• Build psychological safety

Create “no judgment” zones for AI experimentation. Celebrate learning from failures.

• Involve them in shaping the change

Let worried employees help design AI implementations. When they feel in control, fear decreases.

Type 3: The Magical Thinkers – “AI will fix everything”

These people want to use AI for every possible task without any oversight or critical thinking. They treat AI outputs as gospel truth.

What they sound like:

- Let’s AI-enable everything

- Just ask ChatGPT

- AI doesn’t make mistakes like humans do

- We don’t need to check the output

Why this is dangerous

Magical Thinkers create liability time bombs. They contribute to the often-cited 85% AI project failure rate by driving organizations to deploy AI systems without proper testing, validation, or human oversight. The financial and reputational costs can be massive when AI systems fail in customer-facing or business-critical applications.

The real psychology

Magical Thinkers are drawn to AI’s capabilities but don’t understand its limitations. They experience “automation bias”: trusting AI recommendations without verification. It’s like giving someone a sports car without teaching them about brakes.

What actually works

Build critical thinking and governance structures.

• Implement “Trust but Verify” protocols

Every AI output that feeds an important decision needs human review.

• Create AI literacy programs

Teach people when AI works well and when it fails predictably.

• Establish clear boundaries

Define what AI can and cannot be used for in your organization.

• Start with low-risk applications

Prove AI works in safe environments before expanding to critical processes.
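A “Trust but Verify” rule can be as simple as a release gate. The sketch below is only an illustration of the idea, not a prescribed implementation: the `Risk` levels, the `AIOutput` record, and the `can_release` function are hypothetical names, and it assumes someone has already labeled each use case as low or high risk.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Risk(Enum):
    LOW = "low"    # e.g. internal drafts, brainstorming
    HIGH = "high"  # e.g. customer-facing or business-critical decisions


@dataclass
class AIOutput:
    task: str
    content: str
    risk: Risk
    approved_by: Optional[str] = None  # name of the human reviewer, if any


def can_release(output: AIOutput) -> bool:
    """Low-risk outputs pass; high-risk outputs need a named human reviewer."""
    if output.risk is Risk.LOW:
        return True
    return output.approved_by is not None


draft = AIOutput("summarize meeting notes", "draft text", Risk.LOW)
refund = AIOutput("customer refund decision", "draft text", Risk.HIGH)
```

Here `draft` can go out as-is, while `refund` stays blocked until a person puts their name in `approved_by`. The point of the design is that accountability for important decisions always lands on a named human, which is exactly what Magical Thinkers tend to skip.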

The Framework That Actually Works

Based on what I’ve seen succeed across dozens of implementations, there’s no one-size-fits-all approach. Sometimes you need an executive mandate from the top. Sometimes you need grassroots proof points. The key is matching your strategy to your resistance patterns.

The Realistic Approach

For organizations dominated by Dismissers: You often need a top-down executive mandate combined with quick, visible wins. Set realistic expectations upfront: “We’re not expecting AI to 10X our output. We’re looking for 20-30% efficiency gains in specific areas while keeping human expertise central.”

For Catastrophizer-heavy cultures: Lead with job security messaging and extensive upskilling programs. The transition takes 6-12 months, not weeks. Budget for the learning curve and temporary productivity dips.

For Magical Thinker environments: Implement strict governance from day one. Set conservative expectations: “AI will help us work faster, but human judgment remains critical for all important decisions.”
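If you want to make the matching step explicit, a rough sketch might look like the following. It assumes you have already tagged interview or survey responses with one of the three patterns; the labels, the `STRATEGY` table (paraphrased from the three approaches above), and both function names are hypothetical.

```python
from collections import Counter

# Strategies paraphrased from the text; the keys are hypothetical survey labels.
STRATEGY = {
    "dismisser": "executive mandate + quick, visible wins",
    "catastrophizer": "job security messaging + upskilling over 6-12 months",
    "magical_thinker": "strict governance + conservative expectations",
}


def dominant_pattern(responses):
    """Return the most frequent resistance label among tagged responses."""
    if not responses:
        raise ValueError("need at least one tagged response")
    pattern, _count = Counter(responses).most_common(1)[0]
    return pattern


def recommend(responses):
    """Look up the strategy that matches the dominant pattern."""
    return STRATEGY[dominant_pattern(responses)]


tagged = ["dismisser", "catastrophizer", "dismisser", "magical_thinker"]
print(recommend(tagged))
```

Real organizations are rarely this clean: most have a mix of all three, often varying by department, so treat the output as a starting point for discussion rather than a verdict.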

The Executive Reality Check

Here’s what I tell every C-suite team:

You can have the best AI strategy and technology stack in the world, but if your people aren’t ready, you’ll fail.

The companies winning with AI spend 70% of their effort on organizational change and only 30% on technology implementation. They understand that AI transformation is fundamentally a people and process challenge that happens to involve sophisticated technology.

The cost of ignoring this is real, but the upside of getting it right is massive:

- AI leaders see 3X higher ROI

- 20-30% productivity gains in AI-enabled areas

- Competitive advantages that compound over time

Key Takeaways for Leadership

🎯 Diagnosis before deployment

Identify your organization’s dominant resistance patterns before selecting AI tools or use cases.

🏗️ Psychology first, technology second

Address fears, misconceptions, and power dynamics before rolling out new systems.

⚡ Match strategy to resistance

Dismissers need executive mandate + quick wins. Catastrophizers need job security + gradual transition. Magical Thinkers need governance + realistic expectations.

📊 Measure change, not just ROI

Track adoption rates, user confidence levels, and cultural shifts alongside technical metrics.

🕒 Budget for the human transition

Plan for months-long organizational learning curves, not weeks-long technology rollouts.

The companies that crack the human side of AI adoption will dominate the next decade. Those that keep treating it as purely a technical challenge will join the 74% struggling to get value from their AI investments.

The window for competitive advantage is still open, but it’s closing fast.

What type of resistance are you seeing in your organization? And more importantly, what are you doing about it?