Statistical learning deals with a fundamental problem: we design algorithms based on empirical data and compute the costs on this data to steer the optimization, although what we actually want to minimize is the expected cost, which we cannot compute.

Statistical learning theory is, therefore, the theory of how to model and design algorithms that operate on noisy random data.

Personal opinion

It’s a highly interesting course that has changed my perspective on many aspects of machine learning and even science in general.

The course consistently emphasizes the fundamental problems of current modeling and evaluation approaches.

For instance, blindly using maximum likelihood estimation has a serious flaw: the problem of typicality. The solution this technique selects is not necessarily the best among those under consideration, because it can be atypical.

For example, if you flip an unfair coin that lands heads with probability 0.7, the single most likely sequence is all heads, and this is the sequence the maximum likelihood method picks. Yet it is clearly a poor description of what you will observe: a typical sequence contains roughly 70 % heads, so the all-heads sequence is atypical.
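To make the typicality argument concrete, here is a minimal Python sketch. The heads probability of 0.7 comes from the example above; the sequence length of 20 flips and all function names are assumptions chosen for illustration. The sketch compares the probability of the one all-heads sequence with the probability of one specific sequence containing 14 heads, and with the total probability of seeing exactly 14 heads.

```python
from math import comb

P_HEADS = 0.7        # bias of the coin, taken from the example above
N_FLIPS = 20         # sequence length, an assumption for illustration
TYPICAL_HEADS = 14   # expected number of heads: 0.7 * 20


def sequence_probability(num_heads: int, n: int = N_FLIPS, p: float = P_HEADS) -> float:
    """Probability of one specific sequence that contains num_heads heads."""
    return p ** num_heads * (1 - p) ** (n - num_heads)


def head_count_probability(num_heads: int, n: int = N_FLIPS, p: float = P_HEADS) -> float:
    """Probability that a sequence of length n shows exactly num_heads heads."""
    return comb(n, num_heads) * sequence_probability(num_heads, n, p)


p_all_heads_sequence = sequence_probability(N_FLIPS)
p_one_typical_sequence = sequence_probability(TYPICAL_HEADS)
p_typical_count = head_count_probability(TYPICAL_HEADS)

print(f"one all-heads sequence:          {p_all_heads_sequence:.2e}")
print(f"one specific 14-heads sequence:  {p_one_typical_sequence:.2e}")
print(f"any sequence with 14 heads:      {p_typical_count:.2e}")
```

The all-heads sequence is indeed the most probable single sequence, but there is only one of it. There are so many sequences with 14 heads that, taken together, they are far more probable than all heads, which is why all heads is not what you should expect to see.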

Another simple but often underestimated fact is that algorithms that take random variables as input also produce random variables as output, and hence we must treat the output as such.
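A small simulation illustrates this point. The sketch below assumes a toy "algorithm" that returns the minimizer of the empirical squared-error cost, which is simply the sample mean. The Gaussian data source, the sample size, and all names are hypothetical choices for illustration and are not taken from the course.

```python
import random
import statistics

TRUE_MEAN = 0.0      # assumed data-generating parameters (illustrative only)
TRUE_STD = 1.0
SAMPLE_SIZE = 50     # points per dataset
NUM_DATASETS = 200   # number of independent datasets


def draw_dataset(rng: random.Random) -> list[float]:
    """Draw one noisy dataset from the assumed Gaussian source."""
    return [rng.gauss(TRUE_MEAN, TRUE_STD) for _ in range(SAMPLE_SIZE)]


def fit(dataset: list[float]) -> float:
    """Toy algorithm: minimize the empirical squared-error cost.

    The minimizer of sum((x - c) ** 2) over c is the sample mean.
    """
    return statistics.fmean(dataset)


rng = random.Random(0)  # fixed seed so the run is reproducible
outputs = [fit(draw_dataset(rng)) for _ in range(NUM_DATASETS)]

# The output differs from dataset to dataset, so it has its own distribution.
output_spread = statistics.stdev(outputs)
print(f"mean of outputs:   {statistics.fmean(outputs):.3f}")
print(f"spread of outputs: {output_spread:.3f}")
```

Under these assumptions the spread of the outputs should be close to `TRUE_STD / sqrt(SAMPLE_SIZE)`, roughly 0.14. A single run of the algorithm is therefore one draw from a distribution, not a fixed answer.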

Along with Advanced Machine Learning, this course has influenced my perspective on machine learning the most. In these courses, I not only learned about methods such as structured SVMs; they also changed how I think and work in this field, and even how I feel about everyday things.

Structure

The course includes a normal lecture, tutorials, weekly theory exercises, programming assignments, and a written final exam.

A general explanation of each part, and of the other courses I attended as a master's student in computer science at ETH Zurich, can be found here.

Programming Assignments

The programming assignments contribute 30 % of the final mark.

To be admitted to the exam, one must achieve a grade of 4 in at least four exercises.

In total, seven assignments are offered throughout the semester, but the final grade for the assignments is the average of the best four.

Each assignment must be completed within two weeks, and the assignments are published one after the other.

In each assignment, you have to implement and apply an algorithm presented in class. Typical other subtasks are comparisons with other algorithms, writing general explanations, or explaining the behavior for certain parameters.

The assignments are about the following topics:

- Sampling

- Deterministic Annealing

- Histogram Clustering

- Constant Shift Embedding

- Pairwise Clustering

- Mean Field Approximation

- Validation

Topics

The topics discussed in the course are:

- Maximum-entropy

- Introduction

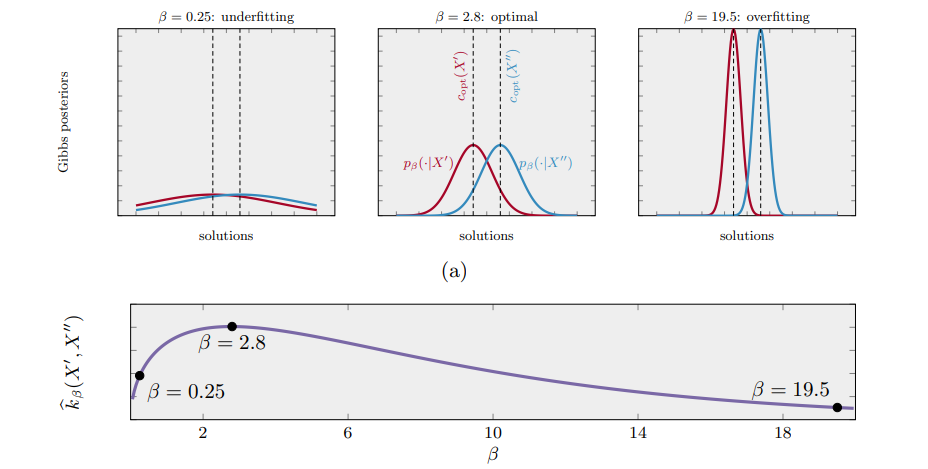

- Posterior Agreement

- Applied to clustering

  - deterministic annealing

  - histogram clustering

  - parametric distributional clustering

  - information bottleneck method

  - constant-shift embedding

  - pairwise clustering

- Mean-field approximation

- Model validation by information theory

Image source: Posterior agreement for large parameter-rich optimization problems